Blog

December 25, 2025

The Periodic Table of Data

I was sitting by the fire this Christmas morning thinking about data governance systems (DGS), and I couldn’t help but visualize raw data (or “original records” in GXP language) as elements in the periodic table. That periodic table is what hooked me as a kid on the scientific path. It was a turning point for me, as everything we see, taste, smell and even think is based on these basic elements combining and interacting in truly impressive fashion. It’s amazing…

We might think of raw data within our organizations in a similar manner. Every action we take, decision we make, and corporate strategy we implement should be based on the exact same pattern of combination and interaction. The problem is most of our data remains in the “periodic table” format – with few combinations and interactions. We see data as individual elements in the table, and then make decisions largely on ‘theory’ of what the data is telling us. The problem here is that theory is not the most effective way of making scientific judgements surrounding medical products. Best would be to run the experiment – see how the data ‘combines and reacts’ through analysis and/or visualization.

One of the earliest and simplest visualization tools is the control chart, thought to have been first used extensively in the 1920s at Bell Telephone Laboratories. This tool takes two elements (e.g., defects vs. date) and combines them to form a more complex compound that can perform some useful function for quality and the business, much as hydrogen and oxygen combine to form water – which has incredible function. Alone, these elements have little function in the world, but combined they change everything. Finding the most useful combinations in our data sets can result in function, and putting these functions to work through continuous improvement is what will separate the great companies of the next generation from the rest. But how do you do it?

Data Value via the DGS. From data -> risk -> value using the tools outlined in ICH Q8, Q9 and Q10. Are you ready?
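To make the control chart idea a bit more concrete, here is a minimal sketch of "combining" defects and dates. It assumes a c-chart (defect counts per day, limits at the mean ± 3√mean), which is my choice of chart type for illustration; the function names and the data are hypothetical.

```python
from math import sqrt


def c_chart_limits(defect_counts):
    """Return (lower, center, upper) 3-sigma limits for a c-chart of defect counts."""
    if not defect_counts:
        raise ValueError("need at least one observation")
    center = sum(defect_counts) / len(defect_counts)
    spread = 3 * sqrt(center)
    # A count cannot be negative, so the lower limit is floored at zero.
    return max(0.0, center - spread), center, center + spread


def out_of_control(dates, defect_counts):
    """Pair each date with its count and return the points outside the limits."""
    lower, _, upper = c_chart_limits(defect_counts)
    return [(d, c) for d, c in zip(dates, defect_counts) if c < lower or c > upper]


# Hypothetical data: daily defect counts for a single workflow.
dates = [f"2025-12-0{day}" for day in range(1, 9)]
counts = [4, 6, 5, 3, 5, 4, 16, 5]

lower, center, upper = c_chart_limits(counts)
signals = out_of_control(dates, counts)
print(f"center={center:.1f}, limits=({lower:.1f}, {upper:.1f}), signals={signals}")
```

Neither list says much on its own; paired up, the one day that deserves a closer look stands out from the routine variation.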

Pete

December 22, 2025

The DGS Experience

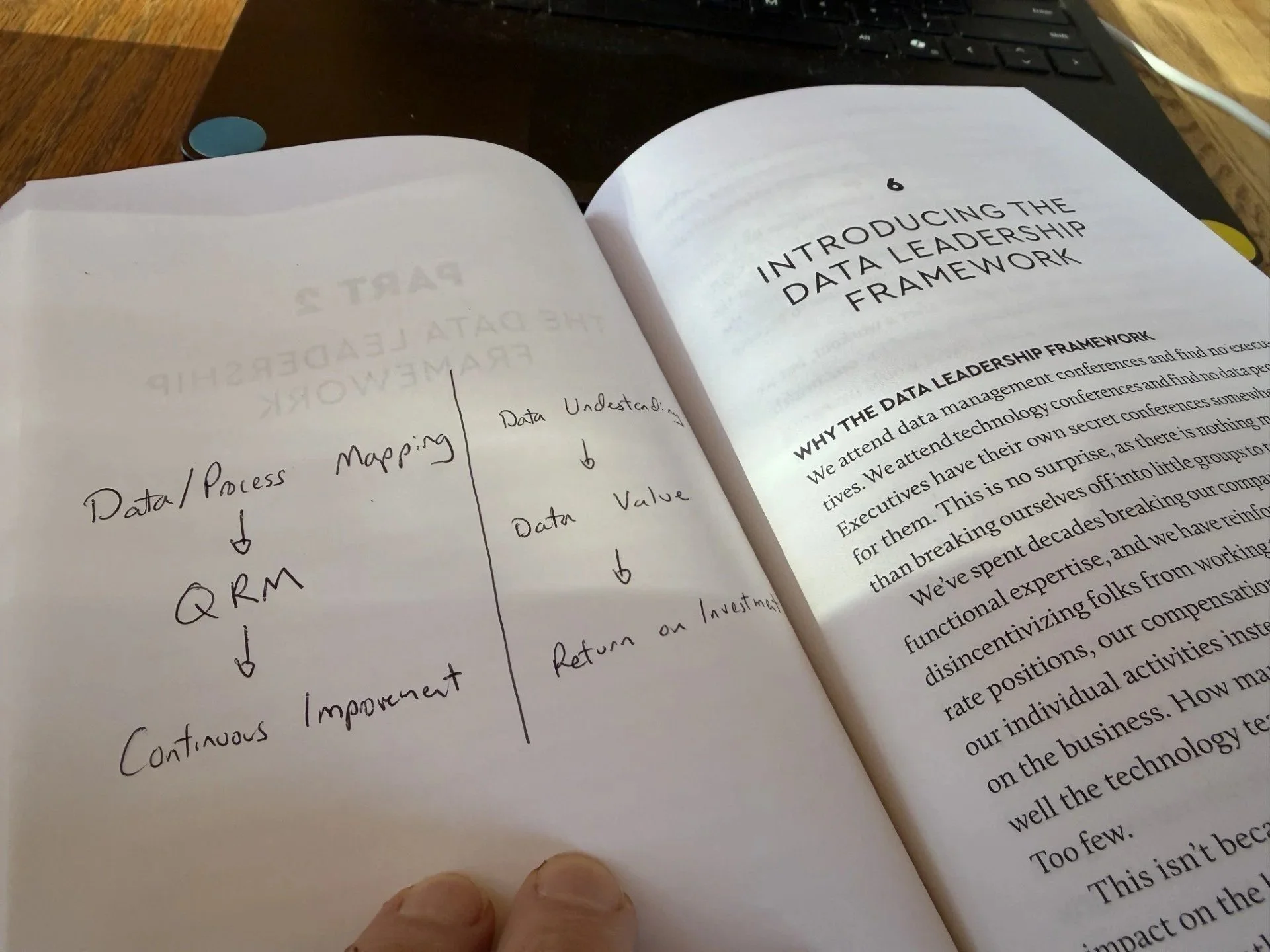

It’s a beautiful day here in Southern Colorado, and I spent the morning in front of the fire reading chapter 6 of one of my favorite books on data governance: “Data Leadership for Everyone” – Anthony J. Algmin. If you haven’t had a chance to read it – I highly recommend bumping it up on your 2026 queue!

Chapter 6 [and really the book in general] speaks to the fact that data governance without leadership and a clear goal to establish ROI assures failure. This takes vision and planning. The most important ingredient in a data governance system (DGS) is not actually the data… Data is needed [obviously], but the most important factor is establishing ‘data value’ and ROI through people’s participation in the DGS. If no beneficial actions come from the DGS (other than some “compliance satisfaction” and passing an internal audit), then the whole thing was a massive capital sink and will be forgotten by 2027. The term DGS will be viewed as negatively as the ALCOA project, which began in 2015 and piled layers of additional work on our operations staff with little ROI from the quality or business perspective.

Success in our next endeavor (the DGS) will require a mix of senior leaders, middle managers and front-line operations staff all establishing a common aim other than “compliance”. In the GXP world, a DGS is not optional, as it is explicitly expected per the EU GMP Chapter 4 [revision] – but what is a DGS?

A DGS is a theory… a way of thinking and acting that goes way beyond SOPs and compliance training. It requires top management to establish a common aim throughout the organization: continuous improvement, which is then executed throughout the organization through the GXP framework: establish the meaning/value of our data (ICH Q9) and then act on that data (CAPA) to make a better product at a lower cost… this also happens to align perfectly with patient safety – hence in theory this should be an easy adjustment for us in the GXP universe. But I am 100% convinced that this will depend on your senior leadership. As I have mentioned previously in this blog, if you want to enjoy meaning in labor and a great place to work, make sure your leadership is aligned with this goal – otherwise get your resume out there. It is not your job to convince management that a DGS is a worthwhile endeavor. Who wants to swim through the swamp of patchwork CAPAs, never-ending deviations, and metrics that are designed to place blame on employees instead of leaders?

There are various levels of a functioning DGS, from basic to advanced. Any organization can establish a fake DGS for regulatory inspection, where beautiful SOPs written by consultants shine brightly from the screen in the conference room, perhaps even a fancy PPT is presented with AI generated images and buzz words… The inspector is initially impressed, but then disappointed once they realize data remains siloed and individual departments struggle to understand what to do with all these audit trails and how they are at all connected to the product/patient. Some days, if they don’t have time – they just skip the review… I fully understand. Don’t ask me to perform a task without understanding the meaning – that is dehumanizing.

The goal of a DGS as outlined in the GMP regulation was never intended to be a “compliance activity” – like old school 1970’s regulations… This is a new era of regulation, where requirements are aligned with good practices from other industries who make excellent products at low costs and have high levels of worker satisfaction in labor.

To get started here are three simple bullets that summarize the journey as outlined in our GXP guidance and regulation:

- Data/Process Mapping
- Data/Process Risk Assessment
  - This exercise establishes “data value”
  - What to review, how often, and its importance
- Continuous Improvement via CAPA
  - This demonstrates ROI from the DGS and engages employees… without continuous improvement as the final step: expected and measured [metrics] by senior leaders, the DGS will wilt faster than a flower in the desert

Once your senior leaders have established the end goal of the DGS, and have provided usable tools and clear metrics – the project can get started and will accelerate quickly; with success [almost] guaranteed.

Pete

November 27, 2025

When to CAPA?

This is an age-old question that has introduced serious confusion and difficulties for GMP generations, and is highlighted within this FDA Form 483 from early 2025. Let’s discuss a new & modern strategy in this blog entry…

In a modern quality system, a CAPA should never be initiated without sound scientific rationale, which can be found in a documented risk assessment. This risk assessment is key. Process/workflow changes can be disruptive, require extensive re-training of staff, and introduce confusion when requirements are continuously altered within daily routine tasks. The result is an increase in mistakes: the opposite of the desired outcome.

So… when is a CAPA deemed necessary? This really depends on the maturity of the site’s Quality System. For example, if detailed workflow-level risk assessments have been established, predicting errors along with an assessment of risk (severity * vulnerability), CAPAs should be reserved only for systemic, repetitive, or high-severity errors. In all other cases, [which make up the vast majority of our deviations], mistakes (deviations) may be considered common-cause (we knew that was going to happen based on vulnerabilities within the workflow). A CAPA may not be required when low-severity errors occur, unless a trend or widespread scope is identified.
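The decision rule described above can be sketched in a few lines. This is only an illustration under assumptions: the `Deviation` fields, the three-level severity scale, and the wording of the rationale strings are hypothetical, and a real site would take the severity rating from its own proactive workflow-level risk assessment.

```python
from dataclasses import dataclass

SEVERITY_LEVELS = ("low", "medium", "high")


@dataclass(frozen=True)
class Deviation:
    """A deviation classified against the proactive workflow risk assessment."""
    description: str
    severity: str      # "low", "medium" or "high", taken from the risk assessment
    systemic: bool     # widespread scope, beyond a single workflow or operator
    repetitive: bool   # a trend has been identified for this error


def capa_decision(dev):
    """Return (capa_required, rationale) following the three categories above."""
    if dev.severity not in SEVERITY_LEVELS:
        raise ValueError(f"unknown severity: {dev.severity!r}")
    reasons = []
    if dev.severity == "high":
        reasons.append("high severity")
    if dev.systemic:
        reasons.append("systemic scope")
    if dev.repetitive:
        reasons.append("repetitive (trend identified)")
    if reasons:
        return True, "CAPA required: " + ", ".join(reasons)
    return False, "No CAPA: common-cause error anticipated by the risk assessment"


# Hypothetical examples.
one_off = Deviation("Late logbook entry", "low", systemic=False, repetitive=False)
trend = Deviation("Late logbook entry", "low", systemic=False, repetitive=True)

print(capa_decision(one_off))
print(capa_decision(trend))
```

The point of returning the rationale alongside the decision is that the "no CAPA" outcome is documented just as deliberately as the "CAPA" outcome.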

Common-cause variation could be defined as “the inherent, random, and predictable fluctuation that exists in any process due to its design.” I argue that this predictable fluctuation also applies to human errors, which are likely the largest category of deviations within your program. If we have identified and assessed potential human factor hazards and proactively determined the impact of such errors (e.g., low/medium/high), and regularly trend such errors with granularity, we have sound scientific rationale available during the FDA inspection to justify no CAPA.

In the FDA Form 483 example above, the site would have needed to provide the investigator with the scientific rationale to justify no CAPA; with that rationale demonstrating that the error did not fall into one of the three categories mentioned above (systemic, repetitive, high-severity). But without that… no dice. It is of critical importance that the site grasps the difference between a proactive and reactive risk assessment. In a modern quality system, proactive risk assessments are available to demonstrate a state of control (validation), and then utilized as a base for reactive risk assessments performed during the deviation exercise. Note that the severity dimension of risk is utilized as the primary factor when evaluating the need for a CAPA. If a CAPA is then deemed necessary, the site has 3 primary options, arranged here in order of priority: 1) re-design the hazard out of the workflow, 2) reduce the vulnerability dimension via the addition of technical/engineering solutions, or 3) simplify and streamline. But…. where does this leave the addition of procedural controls, you might ask? These are a last resort and should be avoided whenever possible as they do not reduce vulnerability - a more likely means to reduce mistakes is via simplification and streamlining as outlined in option #3, even though vulnerability of the hazard occurring remains the same.

Without a proactive quality system established with comprehensive workflow-level risk assessments, however, it may be impossible to demonstrate such rationale within each deviation record due to limited resources/time – hence a burdensome reactive quality system emerges where CAPAs are always required for any error regardless of their risk, scope and/or frequency, because the site has no other option available. This is a sad situation, and makes it nearly impossible for the site to achieve its common aim – with heavy waste incurred as folks scramble with re-trainings, more errors, and employee disengagement.

Pete

November 22, 2025

Simplify and Streamline

Human-related mistakes during production or testing can often lead to loss in confidence in GXP data sets. Sometimes, these result in product recall, regulatory action, drug shortage, or other consequences we would very much like to avoid. Most mistakes are concentrated in those areas of our processes that are highly manual and rely on the ability of the human to perform the action “right first time”, often within a high-pressure environment with multiple and continuous distractions. As a result, we have implemented the concept of “witnessing” or “verification” to reduce the risk that any mistake will go undetected. In theory, this makes total sense – but in reality, I have my doubts. Let’s break it down:

First and foremost, in my experience most companies do not have nearly enough resources to honestly perform the amount of witnessing required per their procedures. As a result, “witnessing” becomes a compliance activity that holds little value, and is the first thing to be skipped when the pressure is on (Friday afternoon?). When detected by a regulator, this is considered ‘falsification’ of records, a serious charge that questions the documentation throughout the entire site – as it will likely be interpreted as a cultural practice (think ‘iceberg analogy’).

Secondly, a witness does not realistically reduce the risk of a mistake occurring, only the ability to [maybe] detect a mistake as it occurs. “Maybe” is the reality – due to the first point -> lack of resources. It is unlikely that the witness has the time/energy to catch the mistake as it happens, therefore witnessing becomes a compliance formality rather than a meaningful activity. So the question is: what value does it bring to achieving the common aim of the organization? Not much… Why expend massive resources to [maybe] detect mistakes vs. investment in mistake prevention instead? Simplify and streamline, using your existing technology to its full potential: this should be considered ‘plan A’.

Scenario: You might be worried that the operator does not enter the action contemporaneously into the batch record, therefore have a “verifier” e-sign alongside the operator – despite system audit trails capturing the time/date at which the action was recorded in the background, and could be used to re-create the GXP activity if any concern arose that could be linked back to non-contemporaneous record keeping. This verification makes little sense, unless we are talking about a lack of QA trust in operations staff due to previous mistakes. This breakdown in trust can easily happen when mistakes occur, so we must be careful and think objectively. Typically, the preventative action should not be to increase witnessing, but rather to GEMBA the workflow and identify opportunities to reduce mistakes through simplification and streamlining – taking full advantage of your existing technical solutions (in this case eBR): a much more effective CAPA vs. additional workload piled on operations…

In summary: treat witnessing/verification as a last resort. First try simplify and streamline using your existing technology as the “witness” and see how it works out. My bet is a notable example of continuous improvement…

Pete

November 10, 2025

Common Goals

Production is the economic engine of the plant. Quality is the patient representative (with ultimate authority) who acts as a coach, guiding production to achieve the highest quality product at the lowest price – maximizing both profit and reputation. The best coach is one who provides guidance and direction to achieve the common goal, in our case achieving this goal while working within the regulatory framework. Any activity introduced by the coach that is neither aligned with the common goal nor required within the regulatory framework should be avoided. When approached objectively, the two departments should align more often than not. The problems arise when there is no clear understanding of risk acceptance within the Quality Unit - any risk identified during discussions is viewed as a regulatory noncompliance (gap), when in fact this is often not the case. QA is uncomfortable with risk, then attempts risk mitigation (which is not risk reduction) via additional procedural controls. Technical and engineering controls are typically the only means for risk reduction, but are not always feasible. I would argue that in the majority of such instances, the best mitigation (when reduction is not possible) is a focus on simplification of the workflow rather than adding procedural steps that do little to achieve the common goal.

One massive source of quality problems (as we well know) is mistakes - therefore we must focus on a reduction in mistakes through process excellence as “plan A” when risky (usually manual) operations are identified. Adding procedural controls (e.g., witnessing/verification) often increases the chances for mistakes (due to limited resources), inadvertently introducing quality problems despite good intentions.

To my QA friends & colleagues: before you roll out the next phase of your DI journey as an action item resulting from the new EU GMP framework – make sure your processes and mindset around risk mitigation are aligned with the principles of process excellence and a reduction in mistakes, otherwise the common goal may be difficult to achieve!

Pete

October 31, 2025

Hybrid Systems

And Happy Halloween!

One controversial aspect of the new (draft) EU GMP Chapter 4 – which will also become a PIC/s Guidance once finalized, is the concept of the “hybrid system”, which is mentioned a total of 19 times in the document. I would like to provide my perspective on the hybrid system debate here, as it continues to be a source of significant regulatory confusion nearly 30 years after publication of FDA’s 21 CFR Part 11. Surprisingly, the question: “are hybrid systems acceptable in 2026?” is still a thing…

Part 1: To begin clarifying the murkiness, we must first define the concept of a “system”. FDA provided a clear outline of the “system” in their 2018 DI guidance, as follows:

“Computer or related systems can refer to computer hardware, software, peripheral devices, networks, cloud infrastructure, personnel, and associated documents (e.g., user manuals and standard operating procedures).”

A “system” is simply the sum total of arrangements that make up a GMP workflow, such as the offline testing of a sample for pH in the laboratory, which is a combination of software, hardware, personnel and documentation. This is likely also considered a “hybrid system”, as there is likely some paper documentation involved, and manual sample/instrument actions. Fully automated online pH measuring would not be considered a “hybrid system”.

A hybrid system is defined in the EU GMP as: “A combination of paper based and electronic means” to achieve a state of process/workflow validation. This is fully aligned with the previous FDA Guidance from 2018: nothing has changed here. A significant number of our GMP workflows are in fact “hybrid systems”. The number is reducing as automation accelerates, but they are unlikely to disappear anytime soon – hence the inclusion of the concept in the new regulation.

Part 2: Next, we must clarify the concept of “validation” – again there is significant confusion here. The regulators define validation as establishing by objective evidence that a process consistently produces its predetermined expectations. In 2026, this will mean providing the workflow data/process map, workflow risk assessment, and historical data demonstrating consistency. Note: historical data may not be available for new processes evaluated during pre-approval inspections. How much detail contained in the map/assessment and how much consistency (variability) are expected by the regulator, of course, depends on the intended use (criticality).

Part 3: Now let’s answer the question… Can the regulator do their job to protect public health if the data/metadata being submitted to demonstrate process consistency is in the form of paper – manually recorded by humans according to a training program and supplemented via good old fashioned trust? All historical data meets specifications, so nothing to see here… Is the “hybrid system” considered validated and ultimately acceptable if the site demonstrates consistent historical data meeting specifications outlined in the dossier, along with a process designed to include high risk paper-based controls? As usual, there is no right or wrong answer here… it depends.

Acceptability in 2026 really depends on what aspects of the hybrid system are paper-based. Most companies utilize a paper logbook to document unscheduled maintenance or troubleshooting, despite an extensive electronic audit trail: a “hybrid system”. This is due to the lack of interface between the production/lab and maintenance software programs. The logbook facilitates traceability between the two systems, and will be needed to manage the risk of unauthorized system access until full digital convergence is achieved (not anytime soon).

On the other hand, some sites still utilize paper logbooks to supplement significant gaps in their production/laboratory assets, such as pH meters without the capability to save historical results (creating a printout as the “original record”). Defending workflow validation in this hybrid system will be nearly impossible in 2026, only maybe in the lowest criticality scenarios? Even the greatest control chart demonstrating historical consistency will be viewed as a waste of their valuable inspection time: “thanks for the information, feel free to submit it within 15-business-days.”

The key to understanding the acceptability of hybrid systems is understanding how the regulator views data risk – which is a prerequisite to demonstrating ALCOA+: a good explanation of which is provided in the new (draft) EU GMP Chapter 4. Specifically, the inclusion of the term “vulnerability” as an important dimension of risk. If a hazard related to accuracy or completeness of data (e.g. orphan data) is considered high due to lack of “appropriate controls” (significant vulnerability), the workflow will not be considered validated, despite beautiful PowerPoint presentations and 100% historical product acceptance. Again – feel free to respond in writing within 15-business-days. Don’t even begin to pretend you haven’t been warned.

Part 11 was published in the “late 1900’s”… c’mon now.

Pete

September 28, 2025

The Bad Dream

Let’s Wake Up!

I’m sitting here on a flight en-route to EWR, and noticed a few birds flying by the window at ~5,000ft… this got me a bit freaked out and thinking about risk, so here we go!

As an industry, we must get over this unwarranted fear of risk. Risk is most often viewed as an unacceptable compliance issue, but in most cases this is simply not true. I cannot stress this enough. Processes involving humans performing manual actions will always be high risk, just like the best BBQ will always be in Texas. No one can argue with those two facts. Human involvement in our GXP workflows isn’t going anywhere anytime soon, it’s “industry standard”, so we must wake up from our dream-like-state and start to address our collective reality. Our workflows contain high, medium, and low risk steps, this is the reality - the same as crossing the street or driving a car inherently include various levels of risky hazards. You will agree with me that ignoring this reality while crossing the street is to your own peril. The exact same scenario in any given GXP workflow = ignore the reality of risk at your own (and unfortunately the patient’s) peril.

Management expects the righthand side of the assessment to be “all green”, by whatever means. This is an unfair expectation – a more realistic management expectation would be to produce the next batch on Mars. As a result, the assessment team struggles, argues, and ultimately formulates some form of electronic wallpaper to appease management and demonstrate “compliance”. Layers upon layers of procedural controls are dumped on front-line operations, and unsurprisingly operational deviations are not reduced… and FDA 483s continue to roll-in. I will [almost] always argue that traditional procedural controls such as additional paperwork achieve the opposite of their intention – and in fact increase risk [and deviations] through distraction and wasted resources.

I will argue in this blog that when a high-risk step is identified – the only means to reduce this reality is via reducing the “vulnerability” of the hazard [see Annex 11], generally through designing in technical/engineering controls. This takes time and investment, so in the meantime – focus your energy on employee engagement and empowerment instead. Additional layers of paperwork are viewed as “micromanagement” by production staff, and crush any cultural momentum. Don’t go down that path – it’s a dead end.

Check out section 10.2 of the new Annex 11:

“Where a routine work process requires that critical data be transferred from one system to another (e.g. from a laboratory instrument to a LIMS system), this should, where possible, be based on validated interfaces rather than on manual transcriptions. If critical data is transcribed manually, effective measures should be in place to ensure that this does not introduce any risk to data integrity.”

In this case, the regulator is not helping the situation… How can a site demonstrate that manual transcription (vs. a system interface) does not introduce any risk to data integrity? Impossible - so as a result the old habits will resurface. The knee-jerk reaction to this expectation of not introducing “any risk” to data integrity is going to be layers of extra paperwork, witnessing, and distraction.

Consider this instead: controlling risk through mutual respect. I trust and respect you to do your job so that our products are the best quality at the lowest price. If you deviate from the standard - that’s OK, bring it up and propose improvements. Management is listening! The reality is that the patient depends on the person to perform the manual transcription correctly (this is a fact), so instead of overcomplicating the process - set them up for success by removing distraction and permitting flow. Additional paperwork gives a false sense of security and disrupts flow – again, we need to collectively wake up from this bad dream!

You might as well give it a try, because the status quo [obviously] does not work. History is an excellent teacher, so let’s start embracing the principles of Lean theory: especially the two main enablers: 1) respect for employees, and 2) continuous improvement. Start with (1), and (2) will follow organically. Here is my proposed roadmap:

1) Wake up from this bad dream,

2) Eliminate unnecessary procedural layers and focus on flow vs. “all green”,

3) Establish mutual respect between operations and management,

4) Enjoy the journey.

August 29, 2025

GXP & Lean

Are they compatible?

As many of you who study the principles of lean theory already know, the key strategy that forces continuous improvement and investing in employees is the one-piece flow cell (continuous manufacturing). Employing a one piece flow forces management to rely on continuous improvement and the ability of their employees to solve complex problems rapidly as they happen, due to no build-up of inventory. No inventory between process steps means a deviation in the workflow causes a paralysis of the line and idle downstream workers. It is definitely a risk and will most certainly cause short-term pain, but the long-term payoffs are immense: higher quality products, cost savings, better employee retention, and facilitating a [real] great place to work. No need to add any references or case studies here, this has been proven over and over again since the 1950’s.

The issue I would like to address in this blog is that most of us working within the GXP world do not deploy one piece flow cells (this can also be services/etc., it does not need to be a drug component/substance/product). In fact, a one piece flow may not always make sense in our GXP universe, due to a variety of complex factors. As a result, however, we are not forced to focus on the two key pillars of lean:

Continuous Improvement

Employee Engagement and Empowerment

As a result, we need to find another ‘forcing function’ that directs management to treat the two key pillars above as necessary for survival. The regulators, many of whom are masters of lean theory, are well aware of this reality and have as a result tried their best to update regulation (Annex 11) and guidance (QMM) to ‘force’ us to embrace the components of lean where we can. Lean processes are not an ‘all-or-nothing’ endeavor. We can extract the strategies (pull vs. push) where we can, and just do the best we can with what we have.

Data Governance, written into the new Annex 11, EU GMP Chapter 4, and ICH E6(R3), is a theory that ‘forces’ us to embrace a “risk-based approach” to GXP workflows. When the site is able to create a mental map of a “risk-based approach” via data governance and lean theory, they will find serious overlap. In fact, I would argue there is no principle of lean that cannot be deployed within a “risk-based approach”. You may never be lucky enough to work within a truly lean organization deploying continuous flow cells.

That’s OK, if you instead replace this (continuous processes) with the common aim/key corporate strategy of data governance, which if understood at its core (join us in an upcoming workshop to explore the concept in depth), will ‘force’ management to embrace the two pillars listed above as necessary for survival.

August 9, 2025

Why Annex/Part 11

Why the core GXP regulations are not enough

There is much chatter on LinkedIn and within the Pharma Community about the revisions to Annex 11, which will automatically also become a harmonized worldwide PIC/s Guidance following the final revisions. Regardless of what you think about the content of the newly proposed regulation, in this blog I would like to address the “why” behind the need for specific regulation for GXP data collected/reviewed/etc. within electronic systems. Shouldn’t the EU GMP chapter 4 (Documentation) or FDA’s GCP found in the 320’s be sufficient to provide assurance of product quality and patient safety? Why do we need extra regulations that are so specific…?

My favorite quotes for defending any regulation (at least in the US) are found in the “preambles” published within the Federal Register – an incredibly boring but yet still somehow interesting ‘magazine’. Random side note: do kids these days even know what a ‘magazine’ looks like? One of my all-time-favs is the Part 11 Preamble, published in 1997 (notably the same year that DVDs launched in the USA, remember those??). So, without further ado, enjoy the read:

Federal Register / Vol. 62, No. 54 / Thursday, March 20, 1997

“Absent effective controls, it is very easy to falsify electronic records to render them indistinguishable from original, true records. The traditional paper record, in comparison, is generally a durable unitized representation that is fixed in time and space. Information is recorded directly in a manner that does not require an intermediate means of interpretation. When an incorrect entry is made, the customary method of correcting FDA related records is to cross out the original entry in a manner that does not obscure the prior data. Although paper records may be falsified, it is relatively difficult (in comparison to falsification of electronic records) to do so in a nondetectable manner. In the case of paper records that have been falsified, a body of evidence exists that can help prove that the records had been changed; comparable methods to detect falsification of electronic records have yet to be fully developed.”

August 5, 2025

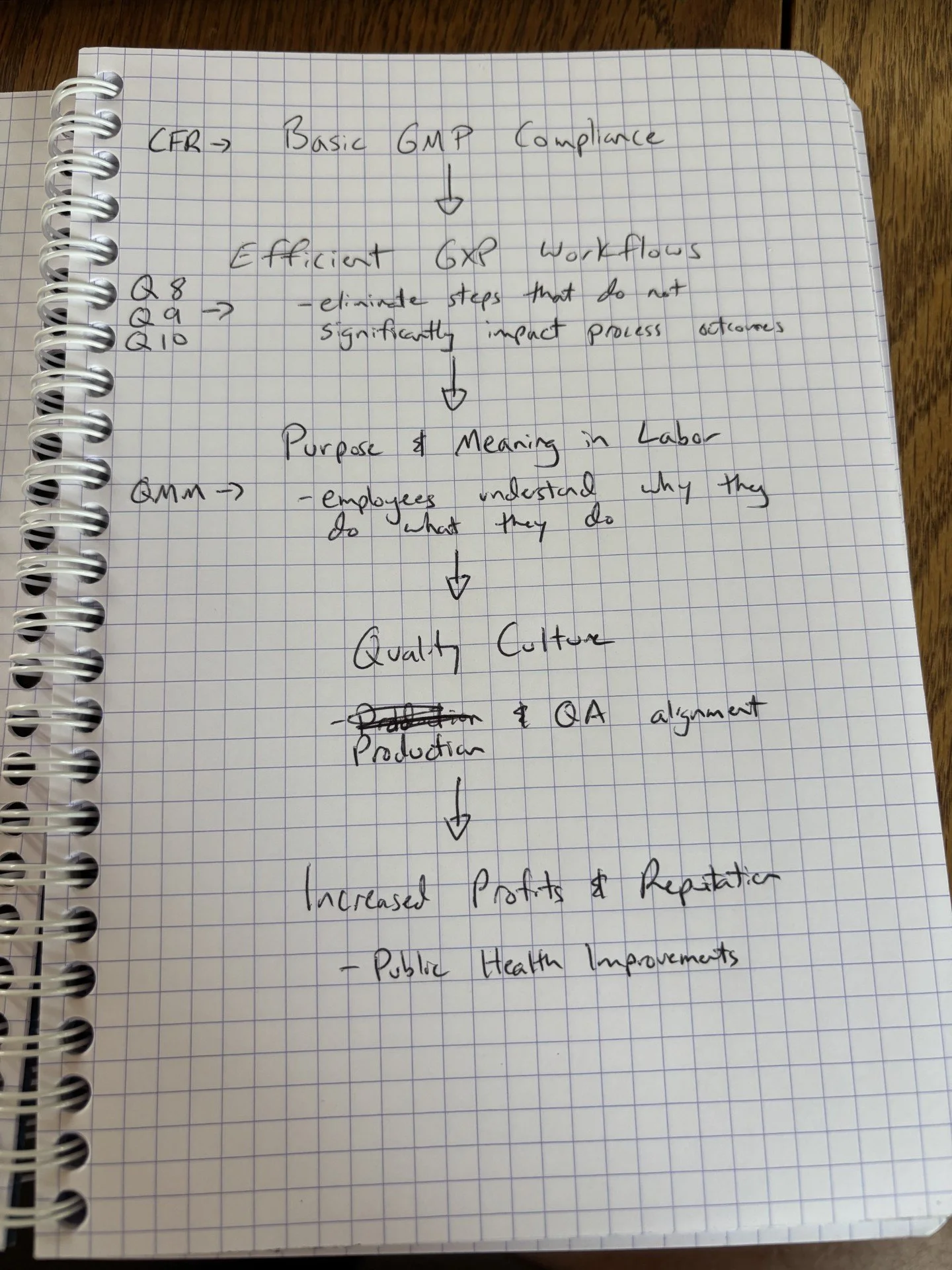

Quality & the Business

Quality Management Initiatives in the Pharmaceutical Industry: An Economic Perspective

CDER has just released, with very little fanfare, the next publication in their Quality Management Maturity (QMM) series, titled “Quality Management Initiatives in the Pharmaceutical Industry: An Economic Perspective” which is a pretty big deal! This is not considered a ‘Guidance for Industry’, but rather a ‘Whitepaper’ in line with previous QMM publications and others such as Drug Shortages. The primary objective of QMM has and will remain reducing shortages, attempting to argue the business case for Quality and healthy market competition. Whitepapers are typically released to address ongoing public health challenges with incentives vs. outlining minimum expectations, as in traditional Guidance Documents. This one appears to have been largely generated by an AI assistant – probably Elsa, as there are likely terabytes of data on the internet about Quality Management and the business case for Quality (just not necessarily from our industry, unfortunately…).

In this case, the incentives presented by CDER are clear: if you focus on the ‘old school’ principles of Quality Management, financial benefits come naturally. Quality and Profit do not need to be opposing forces. ICH Q9: Quality Risk Management (QRM), was published some 15+ years ago and is a model summary of ‘old school quality’, but still (despite recent revisions) remains bare bones and does not include any practical framework to apply its concepts. Perhaps this Whitepaper is an attempt to provide some structure for how to apply QRM in GXP, as the “Lean Six Sigma” manufacturing theory is mentioned multiple times. Lean Six Sigma is a very specific framework for reducing process variation, and can be very effective when applied to largely automated processes or assembly lines (e.g., FMEA is a primary tool, which results in disaster when used without supporting data sets). Lean, on the other hand, is based on more qualitative tools, and is better equipped to handle manual and hybrid processes, such as those found in abundance in our industry.

This lack of structure within Q9, which is expertly written to address automated, hybrid and manual processes alike, is likely the reason many companies have left it on the shelf to accumulate dust, or treated it more as a checkbox compliance activity rather than a guide to achieve excellence in manufacturing. Most GXP management has nightmares of acknowledging GXP process hazards, preferring to keep risk in the shadows and instead blame the worker (human error) for any quality lapse. This is absolutely 100% prohibited in Lean theory, as problems must first be attributed to the process design before any human error can be considered.

I have personally not recommended any one Quality theory to adhere to, preferring the flexibility that comes with simple tools and critical thinking – but maybe it’s time to adopt a more structured and formal approach to QRM? Maybe a structured approach, with study materials and certifications, will finally push us in the right direction? I remain skeptical that this approach will work on a large scale within the GXP world (as the tools are too formal). I think the basic ‘old school’ 1950’s Lean principles, combined with Q9, may provide enough structure, without the “six sigma” addition.

The concepts of Data Governance, outlined in the new PIC/s Guide and associated Annex 11 regulation, provide this middle-ground structure vs. flexibility answer we need. Make sure to join us in an upcoming workshop as we discuss the structure and theory necessary to get started, with the goal of long-term compliance/quality and profitability!

Pete

August 1, 2025

Golden Batch

really?

In the cafeteria during a recent on-site visit, I observed staff indulging in a wonderful buffet including a full spread of appetizers, mains and desserts… along with some nice drinks. Of course I had to inquire: what’s this all about? The answer was that the site had recently achieved a “Golden Batch”. I congratulated the team on a job well done, but the GXP Quality side of my brain had some serious lingering concerns, which I chose to keep to myself, obviously… Let’s get into it here.

The idea of a Golden Batch is a popular concept within the lean manufacturing camp (I am permanently camped out here…), and can be roughly defined as ‘the ideal production run where each parameter that may be susceptible to variability such as materials, temperature, pressure, mixing speed, time, etc., meet their ideal values within the specification range to produce the highest quality product with the lowest waste and therefore lowest cost.’

Problem #1: Most of us do not know the ideal range within our specifications that achieve the goal of highest quality at lowest price…

Problem #2: The definition is silent with regard to deviations. In fact, a Golden Batch in lean theory can still experience multiple deviations during the production run. The Golden Batch is all about measuring process/parameter variability (it is solely process parameter data-driven)! Unfortunately, however, for whatever reason we in GXP have also tagged on the idea of “zero deviations” (this makes me cringe), which is the exact opposite of the lean mindset. This added goal is excess baggage, and will incur significant penalties! To be fair, in theory, this is not a bad endeavor, but it requires a very, very high level of QMM to realistically achieve its goals within the highly regulated GXP manufacturing space. Without a mature Quality Mindset, the “Gold” in Golden will be as elusive to your organization as a leprechaun’s treasure at the end of the rainbow (for reference: it’s not real).

Why? Because in my experience most GXP manufacturing sites still treat deviation investigations, for example, as a failure (negative metric) instead of an opportunity for growth. Treating deviations as a key ingredient for continuous improvement and growth is a foundational principle for lean manufacturing sites. From their perspective, it would be impossible to achieve a Golden Batch without a mature investigations process… This perception of a negative metric within the GXP community, combined with the rewards that come with the Golden Batch, is a recipe for disaster in sites that have not yet set foot on FDA’s QMM ladder. These sites may skip the hard part (continuous improvement over months/years) that is an absolute prerequisite to achieve the Golden Batch. Anyone can achieve a Golden Batch (on paper), but does it really meet the spirit within the lean mindset?

Pete

July 20, 2025

Quality Culture

abstract or tangible?

Today, culture is on my mind. We hear much talk on the subject, but I personally dislike the way we often use the term in the abstract when applied to GXP organizations. Culture does not have to be an abstract concept that is simply unable to be measured. I find senior leadership may at times use culture as a distraction by treating it as something that is felt rather than realized. Treating culture as an abstract feeling dilutes its significance and can be used as an excuse not to focus on tangible results that may actually realize the defining nature of culture: summarized as ‘purpose and meaning in labor’. When we humans find purpose and meaning in work, only then can a common aim among various departments be realized – typically safe and effective medicines. We achieve purpose and meaning in labor by ensuring any action required by an operator or analyst has a reason behind it, and that reason is that it in some way impacts a patient.

Developing SOPs that ensure tasks are meaningful is not easy. The easier route is volume - simply do everything, which will inevitably also include the meaningful tasks. Inspections will come and go without much disruption, but so will valuable employees… For these organizations that focus on volume rather than meaning, culture must be spoken of in the abstract, because it will never be realized. Volume is the enemy of quality culture… Luckily, a clear roadmap to eliminate unnecessary tasks was published by the regulators years ago!

A simple and tangible roadmap to culture is sketched out below: it’s not too difficult – so get onboard!

Pete

June 27, 2025

Sounding the Alarm

AI Chatbots and GXP Compliance

It’s 7am here in Utah, and already the sun is bearing down - time to get some thoughts on paper before the heat truly sets in!

In my recent Investigations & CAPA workshop, the question was posed something to the tune of: “is it OK that we use our enterprise chatbot for assisting with the drafting of deviations text?”. “Sure”, I replied – “AI assistants can assist with deviation management in a variety of ways, from historical trend analysis to root cause analysis, among others”. As this particular workflow is meant to primarily comply with 21 CFR Part 211.192 [investigations into unexplained discrepancies], I obviously had to follow-up with the following statement: “what documentation might you have available to the regulator to demonstrate a validated workflow?” Note deliberate wordsmithing here, I am referencing Process Validation Guidance, as the introduction of an AI assistant to meet a GMP predicate rule certainly needs controls to ensure a “high degree of assurance” that the workflow will produce an outcome commensurate with its risk to product/patient.

The PIC/s Guide for Data Management gives us an excellent roadmap here in its outline of the principles of Process/Data Governance [design – operation – monitoring]. If I were the regulator, I would simply ask the site to demonstrate that each of the 3 governance principles has been met and that the site has a clear understanding of the validated workflow. I would already have ‘targets’ in mind that must be answered during the inspection. Here are just two of several:

Inaccurate Information: inaccuracy and hallucination (making up information that is not true) are well-known risks associated with the use of chatbots. Anyone who has used Chatbots knows this happens on a regular basis (I have to admit that I recently purchased an incorrect bike tube based on wrong information that the chatbot proposed as a statement of material fact. It was incorrect and I am now stuck with a useless tube. I should have verified with the user manual or Reddit but that takes time…). The root cause of this risk is difficult to pinpoint and even more difficult to control.

Inspection Strategy: ask the QA associate if all the facts included in the deviation text are statements of material fact. As they may have been drafted by the chatbot, did they perform interviews with staff to confirm the statements and conclusions made by the chatbot? Did the associate account for any recent process changes, as the model is proposing details based on an averaging/weighting of historical [and potentially outdated] knowledge (it is unaware of the larger quality system, unlike the human)? This is unlikely, because the convenience of using a chatbot makes assuming the accuracy of the model output too easy [going to interview staff takes time, for example]. Here I start to lose confidence in the ALCOA of the deviations data (specifically accuracy). Don’t blame the QA associate for this, it is simply human nature to take the path of least resistance; blame the head of QA or CDO for failing to understand the principles of ICH Q9… Assuming the accuracy of chatbot outputs is a disaster in the making – in my opinion it is only a matter of time until the recalls and regulatory actions start. Inaccurate data in deviations has always been a risk factor considered during inspection, but was not a main concern as it was very difficult to cite on a 483. In the future, it becomes easy if the risk from inaccuracy and hallucination is not clearly identified (design stage), controlled (operations stage) and regularly evaluated (monitoring stage).

Bias: As more repetitive information is fed into the data set and the model is automatically and/or manually fine-tuned, an echo chamber of repeating information is a risk factor that will occur if not governed. This is one manifestation of bias, among others. This risk is closely related to inaccuracy/hallucination, but it is important to break it out as a separate hazard as it must be controlled differently.

Inspection Strategy: request a list of root cause conclusions, and look for repeats. Well designed and controlled AI models are decent critical thinkers, but this takes expertise and risk management from the admin to the operations level. Without this expertise and active control strategy, bias is almost certain to manifest itself in repeated information/conclusions. Has the staff considered all potential relevant contributing factors prior to signing off (per the SOP), or was the model output assumed to be correct (or is the SOP silent)? The PIC/s guide for inspecting a risk management program (a little known but excellent guidance) proposes 3 main observations in the universe of risk management, one of which is termed “unfair assumptions”. Here is where this becomes easy to cite.
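The "look for repeats" check above can be sketched in a few lines. This is only an illustration: the function name, the sample conclusions, and the threshold of two occurrences are all assumptions, and a real review would need to group conclusions that are worded differently but mean the same thing.

```python
from collections import Counter

def repeated_root_causes(conclusions, min_count=2):
    """Return (root cause, count) pairs that appear at least min_count times."""
    # Normalize case and surrounding whitespace so trivial variations count together.
    normalized = [c.strip().lower() for c in conclusions]
    counts = Counter(normalized)
    return [(cause, n) for cause, n in counts.most_common() if n >= min_count]

# Hypothetical root cause conclusions pulled from closed deviations.
sample = [
    "Operator error",
    "operator error ",
    "Gasket wear",
    "Operator error",
    "Procedure unclear",
]

print(repeated_root_causes(sample))
```

A long list of identical conclusions does not prove bias on its own, but it tells the reviewer where to start asking questions.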

Historically, these two bullet points have been cited countless times in FDA 483’s, obviously. The difference is that the scope of the observation is limited (2-3 deviations, or a small cluster considered deficient). The risk to the patient has always been present, but the diversity of humans in QA has mitigated the risk via limitation of scope. In the world of chatbots, the scope may be considered “all”, todo, tous, alle… Yes, the human is still in line, but can they still be considered an adequate risk control measure? I think it is time to sound the alarm, as there is currently an absence of ‘effective control measures’ in place and the entire deviations program is vulnerable to collapse during an external inspection. The ungoverned use of chatbots is reckless, and ultimately unfair to the patients we serve. We can do better.

Pete

May 26th, 2025

Paradox of Choice

less is more

When executing a risk assessment, the scales/standards used to define the ‘severity’ and ‘vulnerability’ values for any given hazard [to determine the risk] are a key starting point. Get the scales wrong, and the whole effort is likely for nothing, causing your organization to lose confidence in the theory of a ‘risk based approach’ being better than conventional decision-making after several regulatory (or internal) observations on the trot. The organization will likely revert back to the practice of generational pass-down of knowledge via storytelling, much like we [humanity] have done for millennia. Entertaining, but incredibly inefficient. For example: ‘we did it like this before, and never received an observation’… sound familiar? Cool story bro! Ugh, please get me on the first flight back to Austin.

There are several key points to understand when designing the assessment scoring scales. However, in this blog entry we will cover just one key design element: simplicity. As humans, we are prone to the cognitive challenge defined as the “Paradox of Choice”. How many of you have struggled to decide on what to eat at the Cheesecake Factory? As of today, they have over 250 potential choices on the menu – which one is good, which one is the best… what should I order? Impossible to determine.

This decision-making problem is defined as the Paradox of Choice. Once a human is presented with a large number of potential choices, two things happen:

1. Mental exhaustion leading to paralysis

2. Dissatisfaction with the end result (as a result of second-guessing)

Maybe this is why I avoid the Cheesecake Factory like the plague? Probably not only for that reason, but I digress…

Within a highly regulated environment, where we deal with life/death decisions, choosing the wrong option is scary, hence we strive to find the “right answer”. Unfortunately, however, there are no right/wrong answers in assessing risk, as determining future (or past) performance of a machine or human is an impossible task – all we can do is get close to the right answer (hence my 100% belief in qualitative vs. quantitative assessments). I consistently come across scoring scales that have 5 or more potential options for both severity and vulnerability (vs. the core three: high/medium/low). It appears that these folks have fallen into the fallacy of “more is better”. Somehow more scientific, more compliant? Wrong, that’s old school. In this case – less is better. In order for the critical thinking side of your brain to kick into high gear, it needs 1) energy, and 2) confidence. When presented with too many choices, it shuts down and hibernates until recovered.

So what can we do about it, Pete? I argue in this blog that three things will happen as a result of simplification of the scoring scales (high, medium, low):

1. More participation in the QRM program due to less mental exhaustion

2. More confidence in data governance outcomes due to less second-guessing and wondering if the wrong choice was made (…should I have ordered the tacos…?)

3. Easy inspections – people know what they do, and [more importantly] why they do it.

Pete

May 22nd, 2025

The 80/20 Rule

as it applies to QRM…

Initiating a data governance project at any given site around the world has to start with a problem statement. Without this, the project will likely die a slow and painful death because no one is really sure what the project was all about in the first place… Folks will likely think (or even verbalize): “don’t we already have a data integrity program in place”? The answer is likely yes, it just isn’t very good. In reality, no one is happy with the level of audit trail review, data reconciliation, or witnessing burdens that are placed on staff members. In my experience, the problem statement is [nearly] universal: the current DI program is not truly “risk based” and therefore likely failing regulatory expectations: hence the continuous stream of 483s citing deficiencies to 21 CFR Part 211.68. Semi-structured DI checklists simply fail to do the job we ask of them (it’s not their fault, don’t blame the checklist!) due to their failure to connect with the process “workflow” (see FDA DI Guidance Q3). On this blog page, I have already written about the two tools used to establish data governance: 1) data/process mapping and 2) qualitative risk assessments using the principles of a) severity and b) vulnerability. In our data governance workshops, we now use AI tools to assist with rapid generation of data/process maps, which is a game changer… I am not going to address this point in this blog entry, but rather the second, more difficult tool: qualitative risk assessments. AI can assist here as well, although the human is much more integrated and critical to the process outcome.

The purpose of this blog entry is to address the main roadblock to achieving rapid/agile risk assessments: the problem of knowing when the risk assessment is complete. If you were tasked with performing a risk assessment for driving to the grocery store, you could identify, evaluate and attempt to control an unlimited number of hazards… For example, the hazard: “large hailstorm forms and shatters windshield”. You could, in theory, evaluate the risk, and put controls in place such as installing bullet-proof glass. This would reduce the risk of this hazard causing a failure to the process. The list of hazards in reality goes to infinity and beyond. Attempting to assess them all would cause what we call “perfection paralysis”, and your refrigerator would quickly run out of goods (unless you live in a city with a delivery service).

As a result of this risk-reality, we as humans often follow the 80/20 rule, including within the science of project management. For example, 80% of a project’s benefits are likely achieved via 20% of the existing efforts. The remaining 80% of effort is only achieving 20% of the project’s benefits. In the GXP realm, this is also likely to be true! Most of our patient safety/quality assurance is achieved via just a few actions performed by operations/QA staff. In the DI world, this is simply those actions that ensure the 1) accuracy and 2) completeness of data.
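To make the 80/20 idea concrete, here is a minimal sketch that ranks hazards and stops once the top few account for 80% of the total. The hazard names and numeric weights are invented purely for illustration (a qualitative assessment would not normally produce numbers like these), and the function name is an assumption.

```python
def vital_few(hazard_scores, target=0.80):
    """Return the top-ranked hazards whose combined score reaches `target` of the total."""
    total = sum(hazard_scores.values())
    if total <= 0:
        return []
    # Rank hazards from largest to smallest contribution.
    ranked = sorted(hazard_scores.items(), key=lambda kv: kv[1], reverse=True)
    selected, running = [], 0
    for name, score in ranked:
        selected.append(name)
        running += score
        if running / total >= target:
            break
    return selected

# Hypothetical hazards with made-up relative weights (they sum to 100).
hazards = {
    "shared login on instrument": 50,
    "manual transcription of results": 32,
    "audit trail not reviewed": 6,
    "clock drift on workstation": 4,
    "label printer misalignment": 3,
    "untracked spreadsheet copy": 2,
    "late logbook entry": 2,
    "faded thermal printout": 1,
}

print(vital_few(hazards))
```

In this made-up data set, two of the eight hazards carry more than 80% of the weight, which is the pattern the rule predicts.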

So how can we take this fundamental and well established principle of project management and put it to use in our data governance program? The answer is clear, focus on the big stuff, and don’t sweat the small stuff (sorry for the cliche but it is just too appropriate!). Remember that the majority of your risk is covered by evaluating just a few process hazards, and that is sufficient. If you have a gap in the big stuff (e.g. lack of access controls), focus your limited resources on fixing those using innovative and possibly non-traditional GXP technology, instead of wasting efforts on an encyclopedia-style risk assessment that burns the midnight oil and leaves everyone exhausted. There is no gas in the tank to fix - it was all expended assessing… If you miss something small, don’t worry - trending, deviations, or CPV will catch it, and it can be addressed then and there - therein lies the beauty of a lifecycle approach to validation. Remember that the vast majority of risk exists within just a few hazards, and you are not likely to miss those. The patient expects medicines that are safe [obviously] but also affordable. Keep the 80/20 rule in mind, and your organization will thrive. Give yourself a timeframe to complete the risk assessment (my advice = 4 hours maximum) - and stick to it.

As a Texan, I am [obviously] a country music fan. If you need a little motivation here, just listen to the incredible song by Kevin S. Wilson - Don't Sweat The Small Stuff. Listen as a group before you start the risk assessment, you will crush it!

Pete

April 25th, 2025

Critical Thinking

what it is not…

It’s starting to heat up here in Austin, hence spending the afternoon indoors… thought I might get to some writing! Lately I have been doing some research into the concept of “critical thinking” – as I would like for it to have actual meaning in my workshops and not be automatically dismissed as generic or cliché. Treating critical thinking as a “buzz word” would be a tragedy, as the concept is essential for success in the new world of automation and big data management. The definition for critical thinking varies widely between publications; it seems there is no universal definition nor even a largely harmonized view. Here is a definition that I think sums up the compilation of viewpoints I have reviewed:

“Considering both what you know and what you don’t know prior to decision-making, with the goal of producing an objective outcome”

The word “critical” comes from the Greek “kritikos” – meaning something to the tune of “to discern”. This is not to be confused with the act of being critical of an idea or proposal… this is much different! In my experience, this is one of the major roadblocks in our industry, the confusion of being critical vs. being discerning… During cross-functional meetings, we often find problem solving difficult because we lack a structured process for discerning the issue at hand, and as a result the project is delayed or just plain cancelled as a consensus cannot be reached as we have no means to deal with critical viewpoints/roadblocks… (hence ancient pH meters floating around out there lacking any sort of data management, the use of flat files, paper-on-glass, annotations… OK I will stop there). The roadblock might be legit, but it must be backed up with objectivity, or it will be taken as an opinion and the project begins to deteriorate quickly.

My recommendation, if your goal is to be a problem solver rather than be seen as a problem creator, is to focus on developing a structured approach to discerning the problem at hand, considering what you know and what you don’t know prior to taking any position or especially prior to throwing up any roadblocks. Can you make an objective contribution considering subjectivity in the equation? Yep. ICH Q9 is always there as an excellent and free resource, and of course comes with the regulatory seal of approval if the fabulous four Q9 concepts (criticality, risk, severity, probability) CRSP [pronounced “crisp”] are utilized according to the guidance - or for an even simpler approach, a simple list with two columns works like magic.

Pete

April 8th, 2025

Common Sense Quality

an epic adventure

Waiting for a flight here in Colorado, and finally have some time to sit down and write! I spent the weekend snowboarding, and had quite a bit of time to think and reflect while on the lifts up to 12,000ft. 2ft of fresh powder was a nice added benefit!

Growing up in rural Oregon, we really only had one mountain that was doable for a day trip: Hoodoo. It’s a small family-run “resort” with a few old-style lifts and not much else going on. Because of its small size, to achieve the sense of adventure that we craved as kids, we had to spend time exploring the out-of-bounds backcountry. We would take the lifts up and then disappear, spending hours out there finding new runs, building jumps, and hiking around in the snow. Over time, I learned that to be a decent backcountry snowboarder, risk management is critical. Split-second left or right decisions will make or break (literally) your day.

Reflecting back on these early memories this week, I realized that the best choice (right or left) while ripping down through the trees was always the path of least resistance. You could go the harder route, but you would often end up getting buried in a tree well and calling your buddies to help dig you out. Initially it seems like a better adventure, but turns out to be no fun for anyone. As I grew older, I learned that the harder routes rarely paid off in our quest for adventure. Significant delays, loss of energy, and overall less enjoyment/adventure (not to mention possible injury).

I feel that we in the GXP world are still learning these lessons. We often take the path of greater resistance, but instead of seeking adventure we do it in search of compliance. It has been my experience, however, that compliance is most likely achieved when we take the path of least resistance. In 2025, GXP compliance can most accurately be summarized by extracting a common thread from FDA Warning Letters: “vigilant operations management oversight”. There is no way to vigilantly monitor complex and subjective workflows… impossible. Many folks agree with me, in theory, but have trouble making the jump and implementing lean GXP workflows, likely due to fear. Overcoming this fear can only be achieved via practice, just like back-country snowboarding. Start small, run a few pilots… Demonstrate the patient and business benefits (they will fully align if the proper resources are available), and start a cultural shift to the path of least resistance. In our governance workshops, we use a phrase to summarize the goal: “Common Sense Quality”.

With practice and determination, you will find that common sense quality relies on developing GXP workflows that choose the path of least resistance (Qualification/SOPs/training/etc…). Why are operators required to “train” on 14 random SOPs per week? This is an example of the opposite of common sense quality; it is a company lost in the woods. These operators will likely get stuck in the trees and require rescuing! On the other hand, vigilant operations management oversight of the training program will result in assigning only the SOPs relevant to their workload, and supplementing others via optional modules and/or periodic all-hands where site-wide trends are addressed.

Don’t be afraid – explore carving the path of least resistance and common sense quality, the adventure and rewards are epic!

March 31, 2025

Dismantling Validation

for a real digital transformation

Before a meaningful digital transformation can occur within your organization, a full dismantling and rebuild of the traditional ‘validation’ framework is required. As the next generation of employees join our industry, they will likely be dumbfounded by the lack of digitalization and common-sense approaches to quality, and may resort to parallel solutions (and unanticipated risks) if we do not adapt. We must go further than a simple mindset shift – such as “we now take a CSA approach”, which is often a half-hearted effort that will likely result in confusion rather than solution. We need to go all in.

Steps for success:

Break down ‘validation’ into its individual components (hardware, software, personnel, documentation)

New definitions (e.g., ‘qualification’) and work streams (e.g., Quality Intelligence) are needed

New risk-management tools are [definitely] required (e.g., qualitative) - no quantitative tools allowed, without compromise

Run a pilot, followed by presentation of the business and GXP perspectives to senior leadership

In my experience, you will find that if your organization is equipped with the mindset and appropriate toolbox, cost and quality have a more nuanced relationship than was historically thought to be true…. Does higher quality require bigger budgets? Maybe not.

To realize that opportunity, however, your organization must approach this issue more like a re-build than a re-model, for those of you who have ever been a home owner…

A simple visual representation with three product streams is outlined below, and for a deep dive, join us in Austin for a week long re-building journey:

February 15, 2025

Risk Approximation

As close to the target as possible

It’s a rainy day here in Dublin, so might as well write a blog entry: so they say…

Yesterday in the car on the cross-country drive I was listening to a podcast on personal finance, and one quote by the guest Ramit Sethi hit me with a significant force. Mr. Sethi stated that a person’s attitude about money has little correlation with the amount in their account. Rather, it is based on a multitude of life experiences starting from a very young age. Interesting.

As a consultant working primarily in the area of data and risk management, I find this statement to be a near perfect description of what we find in our world as well: a site’s perception of process/data risk has little correlation with the actual risk within the process. Just like finance, it is rather based on a lifetime of experiences such as inspections, trainings, previous company directives, etc. We might call these biases. As an industry, we need to fix this discrepancy, and it starts with establishing clear standards for the factors of risk assessment, as an attempt to replace bias with science. For example, what is the definition of a medium severity score, or a low vulnerability score? Is it vague and therefore based on the assessor’s biases? If yes, that is not a recipe for success.

Try establishing standards that are as objective as possible. For example, is a technical or engineering control available that can prevent a hazard from occurring? If yes, that is assigned a low vulnerability score. If not, the assessor may not use other factors to achieve the same conclusion of “low”. Nope, no chance. Gone are the days of “faking it until we make it” in QRM; clear standards make this impossible. Faking a risk conclusion is no different than bending the laws of physics… good luck.
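A standard like the one above is objective enough to be written as a rule. The sketch below is only an illustration: the entry states that a technical or engineering control earns "low" and that nothing else can, while the split between "medium" and "high" based on a procedural control is an assumption added here to complete the example.

```python
def vulnerability_score(has_technical_control, has_procedural_control):
    """Assign a vulnerability level using an objective, yes/no standard."""
    if has_technical_control:
        # A technical or engineering control that prevents the hazard earns "low".
        return "low"
    # Without a technical control, "low" is off the table regardless of other factors.
    # Assumption for illustration: a procedural control earns "medium", nothing earns "high".
    return "medium" if has_procedural_control else "high"

print(vulnerability_score(True, False))
print(vulnerability_score(False, True))
print(vulnerability_score(False, False))
```

Because the inputs are yes/no facts about the process, two assessors looking at the same hazard should land on the same score.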

A risk assessment is never going to be a direct evaluation of patient risk, it will always be an indirect approximation. Our job is to get this indirect approximation to be as close to the target as possible.

Join us in Austin or Dublin at the Governance Workshop as we work through what good standards look like, and how to achieve both self and organizational transformation. Hope to see you there!

Pete

February 2, 2025

“all sources of variability”

Key to “thorough investigations”

FDA recently published a GMP Warning Letter issued January 15th, mainly focused on “failure to investigate all critical deviations”, among other issues. Warning Letters such as this give us an insight into current regulatory expectations for compliance with 211.192, which are important, as there is little guidance available regarding the expectation that manufacturing events be “thoroughly investigated”. What constitutes a “thorough” investigation in 2025? What does a good root cause analysis look like, and what tools should we use to determine product impact?

This warning letter gives us some strong insight. Although the remediation instructions for the company are not necessarily new, they are an excellent reminder, considering the “C” in CGMP always moves forward - never backward. Here are some takeaways to keep in mind, which may be useful to your organization as an internal maturity check:

Understanding process variability is key to demonstrating root causes.

The letter recommends that for issues where no determinate root cause has been identified during the failure investigation, the company perform a “thorough” review of potential production factors that could have led to the failure. This is no easy task, considering a review of documentation (e.g., batch record) is unlikely to identify an obvious cause. Once the low hanging fruit has all been picked, the next step requires identifying potential causes via “scientific” rationale (the hard part). Listing potential causes here based on opinion or best efforts will be considered insufficient, as it is not scientific. The scientific part here means speaking the language of QRM, but without an understanding of process risk and a knowledge management program to turn to, the team performing the investigation is stuck with nowhere to turn. The hard part is actually impossible!

Finding the root cause and therefore the product impact in these situations is unlikely to happen. The best the team can do is to:

Point to the most likely sources of the failure (via reference to a documented process & data risk assessment)

Evaluate worst case (patient) scenarios for each element

Make the conclusion, being transparent that this is not objective, but as objective as possible considering the circumstances and residual patient risk. Every decision to close an investigation involves some level of subjectivity. That’s OK, the regulators are simply requesting that the decision be as objective as possible, following good scientific practices

Decisions should be “data driven”.

Understanding “all sources of variability” via a process-level granular risk assessment and subsequent targeted data monitoring program (we might call this CPV) greatly enhances the ability of the team to increase the level of objectivity in the final impact statement. It also leads to meaningful CAPAs, rather than the traditional expansion of work instructions/training and more best efforts at the floor level.
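As a rough illustration of what a targeted data monitoring program might compute, here is a minimal Python sketch of an individuals control chart. The function names, the choice of an individuals/moving-range chart, and the idea of using a historical baseline are assumptions made for illustration; the Warning Letter does not prescribe any particular method.

```python
from statistics import mean


def individuals_limits(baseline):
    """Return (lcl, center, ucl) for an individuals control chart.

    Sigma is estimated from the average moving range divided by 1.128,
    the standard d2 constant for moving ranges of two consecutive points.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline points")
    center = mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = mean(moving_ranges) / 1.128
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def out_of_control(new_values, baseline):
    """Return (index, value) pairs in new_values outside the baseline limits."""
    lcl, _, ucl = individuals_limits(baseline)
    return [(i, v) for i, v in enumerate(new_values) if v < lcl or v > ucl]
```

Calling `out_of_control(recent_results, historical_results)` with two hypothetical lists of in-process results returns the points that fall outside the limits, which gives the investigation team something more objective than opinion to point to.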

The letter goes on to describe some elements of this transformation, many of which have been outlined in this blog (hence the statement above that this is not necessarily anything new).

In summary, it might be wise to reflect on your organization’s current maturity regarding these two points. Are your investigation teams set up for success in 2025? Do they have a knowledge management program (see ICH Q8/9/10) to turn to when difficult situations arise? Solving the difficult problems requires a quality system transformation, and this is clearly evident in the letter. Although you may not be able to transform an entire organization, can you transform your department or division? Start small.

Map out your workflow and identify “all sources of variability”.

Perform a qualitative risk assessment using the concepts of severity and vulnerability.

Trace them back to your current process.

Do you have the data you need to monitor variability? If yes, create a dashboard.
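The steps above can be sketched in a few lines of Python. This is only a sketch under stated assumptions: the three-level scale, the severity times vulnerability score, the threshold, and the `VariabilitySource` structure are all hypothetical choices for illustration, not something taken from the Warning Letter or any guideline.

```python
from dataclasses import dataclass

# Assumed three-level qualitative scale (hypothetical).
SCALE = {"low": 1, "medium": 2, "high": 3}


@dataclass(frozen=True)
class VariabilitySource:
    step: str           # workflow step the source traces back to
    description: str    # what can vary at that step
    severity: str       # "low", "medium", or "high"
    vulnerability: str  # "low", "medium", or "high"
    monitored: bool     # is data already being collected for it?

    @property
    def score(self) -> int:
        # Simple severity x vulnerability product (an assumed scoring rule).
        return SCALE[self.severity] * SCALE[self.vulnerability]


def rank_sources(sources):
    """Sort sources of variability from highest to lowest risk score."""
    return sorted(sources, key=lambda s: s.score, reverse=True)


def monitoring_gaps(sources, threshold=6):
    """Return high-scoring sources that have no monitoring data yet."""
    return [s for s in rank_sources(sources)
            if s.score >= threshold and not s.monitored]
```

The ranked list tells you which sources deserve a place on the dashboard first, and `monitoring_gaps` lists the ones where you do not yet have the data you need.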

Don’t get hung up on perfection, just do what you can do within your department, and before you know it you will have a division that serves as the model for the organization! If you are looking for a solution that will achieve full objectivity, I’m afraid I have bad news… Just do the best you can with what you have, and let the future be the judge.

Pete

January 12, 2025

Draft Guidance

211.110 (Process Validation & Control)

Like many in industry, I was somewhat surprised by the publication of the FDA draft guidance earlier this month, titled “Considerations for Complying With 21 CFR 211.110”. My first thought was that it was published to clarify the phrase “vigilant operations management oversight”, which is often cited in modern Warning Letters when sites lose focus on quality operations. As I read through the document, which is quite short, I found this may hold some truth, but really I found what appears to be a summary of existing guidance documents (each of which is listed at the conclusion of the document…). I had to read the document a second time to determine whether any new guidance and/or expectations are included. After a second reading, here are some of the key takeaways, in my opinion, although I could argue that these have been around for some time already:

In-process monitoring/sampling should not be considered solely a compliance activity based on traditional manufacturing strategies. The strategy for any in-process sampling/monitoring should be based on a risk assessment and process knowledge. This has been built into the regulation since forever via the use of the phrase “where appropriate”, which is included in both 110(a) and 110(b); we just haven’t been very good at demonstrating what is appropriate. Each process has its own unique characteristics, which should be identified and evaluated according to QRM principles, and not just based on the strategies used since the late 1900’s. Here we find some language largely drawn from the existing data integrity guideline: “Manufacturers must maintain the process in a state of control over the life of the process to ensure drug product quality, even as materials, equipment, production environment, personnel, and manufacturing procedures change”.

This is largely aligned with the components of a validated workflow outlined in the existing FDA DI guidance: “hardware, software, personnel, documentation”. Basically, if you are going to sample, monitor, or both, make sure your rationale for why you do what you do is based on risks that exist within the workflows, in addition to other factors such as chemistry/etc.

The guideline clarifies the definition of a “significant phase”, and follows up the discussion with an example of a process where only minor adjustments are permitted. If effective controls over changes to parameters are achieved via technical means (e.g. access controls), there may not be a need to implement extensive Quality Unit oversight. A simple review of a batch summary may suffice.

I very much like the inclusion of this example, as it backs up the existing guidance provided in the PIC/s guide for Data Governance, and provides a practical example of “review by exception” or a “risk-based approach” to QA oversight. Basically, don’t waste resources on extensive monitoring and oversight if you have invested in process understanding and digital solutions. This action would not be “significant”!

Use innovative means for process monitoring. Options include traditional offline testing (expensive and error-prone), in-process monitoring, or a combination of in-process monitoring and mathematical modeling. The latter is better, if possible!

Advanced Manufacturing is not quite ready to go live, unfortunately. In the final section of the guide, we find additional guidance largely in line with existing Q8, QRM and PAT guidance. Advanced manufacturing is no doubt the future, so what is the delay? It appears that proposals submitted thus far have lacked scientific rationale, for example by lacking a QRM strategy for an “unplanned disturbance” that may occur during the process. Without a thorough QRM plan established where extensive hazards (planned and unplanned) are evaluated, it is unlikely that we as an industry can move forward in this space. It seems we need more work in this area.

In summary, I see FDA publishing a brief reminder that QRM is not optional, regardless of whether the dosage form has been around forever (e.g. rapid release tablets), and a statement on the current state of affairs regarding future technologies for process control (it’s not ready to go live). I see this publication largely as a response to the record number of drug shortages being experienced in the past year, with the root causes largely being attributed to “manufacturing and quality issues”. Basically, we need to get better at what we should have been doing since forever = QRM.

Pete

December 1, 2024

Doc Control: when is enough, enough?

While browsing recent FDA 483’s within the Redica database, I came across a 483 (issued September 27, 2024) that may give us an insight regarding regulatory expectations for the management of in-process CGMP documentation that will eventually be used (e.g., uploaded to an eQMS) to support an investigation initiated under 211.192. For example, the use of MS Word, Excel, or even printed blank paper forms to document steps taken during an incident, investigation, OOX, etc… Here is the text, which was cited under 211.68(b):

“General Format documents not controlled or reconciled. These forms can be used in part for DEV/CAPA investigations, assessment/evaluation of CAPAs, and as a form for attaching data printouts.”

In this case, there were no controls implemented for “General Format” forms, meaning they can be easily replaced without a trace. In most cases, firms use Word for such activities, which presents its own challenge! The use of any software to support an investigation (a predicate rule!) would certainly need to meet the expectations outlined in 21 CFR Part 11, just like paper forms must meet doc control expectations. However, we have so far not considered this to be relevant. So where do we currently stand with regard to software and investigations? I’m afraid this is unacknowledged risk…

Many firms use Word/etc. to document the steps taken during the investigation, including a description of the event, evaluation of scope, root cause analysis, product impact, and CAPA. All of this is done without any controls! This data, which in many cases directly impacts a patient if incorrect or inaccurate, is essentially ungoverned. In fact, most firms don’t even mention the use of Word within their investigation SOPs/work instructions!

If this were cited on an FDA 483, the investigator would need to answer the “so what” question. So here we go. I might write it as follows, cited under 211.68(b):

Appropriate controls are not exercised over computers or related systems to assure that changes in master production and control records or other records are instituted only by authorized personnel. Specifically,

Your firm uses MS Word to document CGMP original data collected during the OOX, deviation, incident, and complaint investigation processes. There are no written procedures established to describe the use of MS Word during the investigation process. Additionally, no risk assessment has been completed to identify any residual risk in the current unofficial workflow regarding the accuracy and completeness of CGMP data and/or metadata.

For example, during my review of the laptop assigned to your QA Associate, I noted at least 6 ongoing deviation investigations stored within the “Deviations” shared folder that were partially completed. No audit trail is available to evaluate the creation, modification and deletion of this data.

As a result, the accuracy and completeness of data collected during the investigations process and ultimately uploaded to your eQMS cannot be verified.

Have details collected during the initial scope evaluation been removed or altered to fit the product impact assessment? This may be done with the best of intentions; however, it may have unintended consequences. In my opinion, this is unacceptable residual risk. Even if we were operating in a largely unregulated environment, such as the manufacture of smartphones, this would be bad for business. Senior management should be hungry for the real and unfiltered metrics/state of quality, which originate at the front line but are often diluted and/or biased as they move up the chain to the monthly meeting. Why create the opportunity for bias to creep in via revision of the investigation details without a trace? Nah. We can (and should) do better!
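To make “without a trace” concrete, here is a minimal Python sketch of a tamper-evident log for investigation edits, where each entry carries a hash of the one before it. The function names, fields, and hash-chain design are assumptions for illustration only. This is not a description of any eQMS, and on its own it would not satisfy Part 11 (someone with full access could rebuild the whole chain), but it shows how creation, modification, and deletion events can be made visible.

```python
import hashlib
import json


def _digest(body):
    """SHA-256 over a canonical JSON form of the entry body."""
    return hashlib.sha256(
        json.dumps(body, sort_keys=True).encode("utf-8")
    ).hexdigest()


def append_event(trail, user, action, detail, timestamp):
    """Append a create/modify/delete event linked to the previous entry."""
    prev_hash = trail[-1]["hash"] if trail else ""
    body = {"user": user, "action": action, "detail": detail,
            "timestamp": timestamp, "prev_hash": prev_hash}
    trail.append({**body, "hash": _digest(body)})
    return trail


def verify(trail):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = ""
    for entry in trail:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

If a scope detail recorded in an early entry is later edited or dropped, `verify` returns `False`, which is exactly the kind of question an uncontrolled Word file cannot answer.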

It is time to start a real discussion in this space.

Pete

November 2, 2024

Original Records

The new mullets.

Regarding FDA’s Guide for Data Integrity in CGMP, specifically Question 10: I feel that I need to do a bit of a deeper dive here, as there appears to be a disconnect between how industry and the regulator interpret this very important question. Sorry for the length, but I felt I had to cut/paste the entire section of the guidance into this blog for context. I have highlighted in bold the key words leading to confusion and ultimately unnecessary FDA Form 483 Observations. Here we go:

Q: Is it acceptable to retain paper printouts or static records instead of original electronic records from stand-alone computerized laboratory instruments, such as an FT-IR instrument?

PART 1:

A: A paper printout or static record may satisfy retention requirements if it is the original record or a true copy of the original record (see §§ 211.68(b), 211.188, 211.194, and 212.60). During data acquisition, for example, pH meters and balances may create a paper printout or static record as the original record. In this case, the paper printout or static record, or a true copy, must be retained (§ 211.180).

Thoughts:

“If it is the original record” – this is defined by PIC/s (FDA is a member) as the “first capture of information”. This means some computerized systems do not capture and store any data; the data is simply transient. For example:

older pH meters that simply display the value and create a printout, but do not store any electronic record of the analysis. The printout would be considered the original record. However, there are not many of these left: we might refer to them as CGMP Unicorns?

“or a true copy of the original record” – the process for creating true copies is defined in section 7.7.5 of the PIC/s guidance. The guide states that creating true paper copies of electronic records is “likely to be onerous in its administration to enable a GMP/GDP compliant record”:

This would require someone to periodically perform a 100% reconciliation between the paper printouts and electronic records. This individual would have to sign/date a worksheet or other record certifying that all data has been reconciled between the two sources (paper printouts and electronic). A second person would follow with another 100% reconciliation, and sign/date accordingly as a reviewer. Then the electronic database could be deleted by the IT administrator according to a written procedure, and the paper records could be considered true/exact copies. This is an “onerous” process, and really doesn’t make much business $en$e in any universe.

Additionally, there are many problems with this “true copy” approach. I will just name a few here:

The 100% reconciliation is taxing on front-line employees, and is a procedural control prone to mistakes. Serious deviations are likely, and will be an easy target for regulators.

There may be metadata that is not available on the printouts, but is associated with the original record. This means that true/exact copies are not being created, an easy observation…

You are demonstrating a quality culture that may have been acceptable in the “late 1900’s”, but certainly not today. It will be difficult for your site to survive much longer as quality transparency becomes standardized under FDA’s QMM…
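To make the reconciliation and metadata problems concrete, here is a minimal Python sketch of a 100% reconciliation between electronic records and transcribed printouts. The record layout, the field names, and the `reconcile` function are hypothetical; the point is only to show how quickly findings pile up when a metadata field exists electronically but never appears on paper.

```python
def reconcile(electronic, printouts, fields):
    """Compare electronic records to printouts, keyed by record id.

    electronic, printouts: dicts mapping record id -> dict of field values.
    fields: every data/metadata field a true copy would need to carry.
    Returns a list of (record_id, finding) tuples; empty means reconciled.
    """
    findings = []
    for record_id in sorted(set(electronic) | set(printouts)):
        e = electronic.get(record_id)
        p = printouts.get(record_id)
        if e is None:
            findings.append((record_id, "no electronic record"))
        elif p is None:
            findings.append((record_id, "no printout"))
        else:
            for field in fields:
                if field not in p:
                    findings.append((record_id, f"{field} not on printout"))
                elif e.get(field) != p[field]:
                    findings.append((record_id, f"{field} mismatch"))
    return findings
```

Every non-empty result is a potential deviation, and a metadata field that is simply never printed produces a finding for every single record.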

PART 2